How remouldable computer hardware is speeding up science

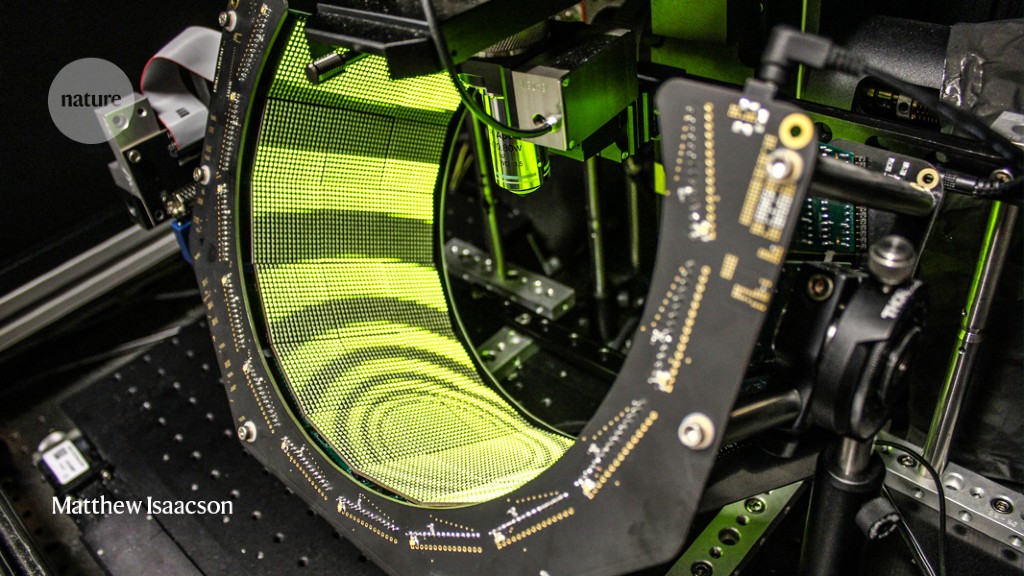

Michael Reiser is, as he puts it, “fanatical about timing”. A neuroscientist at the Howard Hughes Medical Institute’s Janelia Research Campus in Ashburn, Virginia, Reiser studies fly vision. Some of his experiments involve placing flies in an immersive virtual-reality arena and seamlessly redrawing the scene while tracking how the insects respond. Modern PCs, with their complex operating systems and multitasking central processing units (CPUs), cannot guarantee the temporal precision required. So Reiser, together with engineers at Sciotex, a technology firm in Newtown Square, Pennsylvania, found a piece of computing hardware that could: an FPGA.

An FPGA, or field-programmable gate array, is basically “electronic mud”, says Bruno Levy, a computer scientist and director of the Inria Nancy Grand-Est research centre in Villers-lès-Nancy, France. It is a collection of hundreds or even millions of unconfigured logic elements on a silicon chip that, like clay, can be ‘moulded’ — and even re-moulded — to accelerate applications ranging from genomic alignment to image processing to deep learning.

Suppose that a researcher needs to quickly process data streaming off a camera in chunks of 1,000 bits. Most modern CPUs handle data 64 bits at a time, so they would have to break the problem into smaller pieces. But it’s possible to configure an FPGA to do that calculation in a single step, says Inria Nancy computer scientist Sylvain Lefebvre. Even if each FPGA step is slower than its CPU counterpart, “it’s actually a win, you’re going faster”, he says, because the problem isn’t broken down. FPGAs excel at applications requiring precise timing, speed-critical algorithms or low energy consumption, he adds.
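To make the arithmetic concrete, here is a minimal Python sketch of what breaking a 1,000-bit chunk into 64-bit pieces involves. The choice of operation (a bitwise XOR) and the function names are illustrative assumptions, not details from Lefebvre's example, and the sketch ignores the vector instructions that real CPUs can use to widen their reach somewhat.

```python
import math

WORD_BITS = 64      # width assumed for one CPU step
CHUNK_BITS = 1000   # chunk size from the camera example above

def split_into_words(value, total_bits=CHUNK_BITS, word_bits=WORD_BITS):
    """Split a wide integer into word-sized pieces, least significant first."""
    n_words = math.ceil(total_bits / word_bits)
    mask = (1 << word_bits) - 1
    return [(value >> (i * word_bits)) & mask for i in range(n_words)]

def xor_wordwise(a, b):
    """XOR two wide values one 64-bit word at a time, counting the steps."""
    words = [x ^ y for x, y in zip(split_into_words(a), split_into_words(b))]
    result = 0
    for i, word in enumerate(words):
        result |= word << (i * WORD_BITS)
    return result, len(words)

a = (1 << CHUNK_BITS) - 1      # hypothetical chunk: all 1,000 bits set
b = 1 << (CHUNK_BITS - 1)      # hypothetical chunk: only the top bit set

wide = a ^ b                   # stands in for one 1,000-bit-wide FPGA step
narrow, steps = xor_wordwise(a, b)

print(steps)                   # number of 64-bit steps needed
print(narrow == wide)          # both routes give the same answer
```

Under these assumptions the 64-bit route takes 16 steps to cover the chunk, whereas a circuit built 1,000 bits wide would cover it in one, which is why a slower clock can still come out ahead.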

Javier Serrano, manager of electronics design and low-level software at CERN, Europe’s particle-physics laboratory near Geneva, Switzerland, and his colleagues used FPGAs, plus White Rabbit — a bespoke extension to the Ethernet networking protocol — to create a system that can capture instabilities in the Large Hadron Collider particle beam with nanosecond precision.

At Queen’s University Belfast, UK, computer hardware specialist Roger Woods is building a fibre-optic camera system that uses FPGAs to process multispectral images of coronary arteries fast enough for use during surgery. And at Janelia, senior scientist Chuntao Dan has created a closed-loop imaging system that can interpret and respond to the positioning of fly wings as they beat every 5 milliseconds. Microsoft’s Windows operating system introduces a timing jitter of up to 30 milliseconds, Dan says. But using an FPGA, “we achieved all the analysis in 145 microseconds”, meaning temporal resolution is never an issue despite the limitations of a conventional computer.
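The three figures Dan quotes can be put side by side with a few lines of Python. This is only back-of-the-envelope arithmetic on the numbers above; the variable names are ours, and nothing here models the imaging system itself.

```python
# All times in microseconds, taken from the figures quoted above.
WINGBEAT_US = 5_000    # one wingbeat every 5 milliseconds
JITTER_US = 30_000     # worst-case Windows timing jitter, 30 milliseconds
ANALYSIS_US = 145      # FPGA analysis time

# How many wingbeats could pass unnoticed during worst-case jitter?
jitter_in_wingbeats = JITTER_US // WINGBEAT_US

# How many complete FPGA analyses fit inside a single wingbeat?
analyses_per_wingbeat = WINGBEAT_US // ANALYSIS_US

# What share of a wingbeat does one analysis occupy?
analysis_share = ANALYSIS_US / WINGBEAT_US

print(jitter_in_wingbeats)       # wingbeats spanned by the jitter
print(analyses_per_wingbeat)     # analyses per wingbeat
print(f"{analysis_share:.1%}")   # fraction of a wingbeat used
```

On these numbers, worst-case jitter on a conventional PC spans six wingbeats, whereas the FPGA analysis uses about 3% of one.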

FPGAs are configured using a hardware-description language (HDL), such as VHDL or Verilog, with which researchers can implement anything from blinking LEDs to a full-blown CPU. Another option is Silice, a language with C-like syntax that Lefebvre, who developed it, has bolted on to Verilog. Whichever HDL is used, a synthesis tool translates it into a list of logic elements, and a place-and-route tool matches those to the physical chip. The resulting bitstream is then flashed to the FPGA.

The configuration code, or gateware, as Serrano calls it, isn’t necessarily difficult to write. But it does require a different mindset to traditional programming, says Olof Kindgren, a director and co-founder of the UK-based Free and Open Source Silicon Foundation. Whereas software code is procedural, gateware is descriptive. “You describe how the data moves between the registers in your design each clock cycle, which is not how most software developers think,” says Kindgren. As a result, even computationally savvy researchers might want to consult a specialist to squeeze the most speed from their designs.
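Kindgren's distinction can be mimicked in ordinary Python, with the caveat that this is a software imitation of the mindset rather than gateware. In the sketch below, a hypothetical three-register design is described by a single function that says what every register will hold after the next clock tick, computed only from what the registers hold now. The register names and the toy circuit (which sums the two previous input samples) are assumptions made for illustration.

```python
def clock_tick(regs, data_in):
    """Describe the next value of every register from the current values.

    All three registers update together, as they would on a clock edge:
    each right-hand side reads only the old state.
    """
    return {
        "stage1": data_in,                          # capture the new sample
        "stage2": regs["stage1"],                   # pass the previous sample along
        "total": regs["stage1"] + regs["stage2"],   # sum of the two held samples
    }

def simulate(inputs):
    """Drive the design with one input sample per clock cycle."""
    regs = {"stage1": 0, "stage2": 0, "total": 0}
    trace = []
    for sample in inputs:
        regs = clock_tick(regs, sample)
        trace.append(regs["total"])
    return trace

trace = simulate([1, 2, 3, 4, 0, 0])
print(trace)  # [0, 1, 3, 5, 7, 4]
```

The sum of the first two samples (1 + 2 = 3) does not appear until the third clock cycle, because each value has to move through the registers one tick at a time. Reasoning about that kind of latency, rather than about a sequence of instructions, is the shift in thinking that gateware demands.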

FPGA technology dates to the mid-1980s, but improvements in design software have made it increasingly accessible. Xilinx (owned by the chipmaker AMD) and Altera (owned by the chipmaker Intel) dominate the market, and both offer development tools and chips of varying complexity and cost. A handful of open-source tools also exist, including Yosys (a synthesis tool) and nextpnr (a place-and-route tool), both developed by computer scientist Claire Wolf, who is chief technology officer at the Vienna-based software company YosysHQ. Lefebvre advises starting with a ready-to-use FPGA board that includes memory and peripherals, such as USB and HDMI ports. The Xilinx PYNQ, which can be programmed using Python, and the open-hardware iCEBreaker and ULX3S are good options.

Reiser’s collaborators at Sciotex used an FPGA from National Instruments, based in Austin, Texas, which they programmed using the company’s graphical LabVIEW coding environment. The hardware, including components for data acquisition, cost about US$5,000, Reiser says. But with it, he got his answer: flies can react to moving objects in their field of view about twice as fast as people can, he found. Proving that limit required a display that his team could refresh ten times faster than the reactions they were probing. “We like temporal precision,” Reiser says. “It makes our lives so much easier.”